📜 Biography

I am a researcher at Shanghai AI Lab, collaborating closely with Dr. Kaipeng Zhang and Dr. Wenqi Shao. I received my Ph.D. degree from Beijing Institute of Technology (BIT) in 2025, advised by Prof. Yuwei Wu and Prof. Yunde Jia, and received the Excellent Ph.D. Dissertation Award of BIT. I received my master's degree from Northeastern University in 2020, supervised by Prof. Shukuan Lin, and my bachelor's degree from Harbin University of Science and Technology in 2017.

My research interests include:

- vision-and-language

- image/video generation

- internet-augmented generation

- compositional generalization

🎓 Education

- 2020.09 - 2025.03, Ph.D. in CS, Beijing Institute of Technology, Beijing, China

- 2017.09 - 2020.01, Master in CS, Northeastern University, Shenyang, Liaoning, China

- 2013.09 - 2017.06, Bachelor in CS, Harbin University of Science and Technology, Harbin, Heilongjiang, China

⚡ Preprint

* indicates equal contribution

+ indicates corresponding author

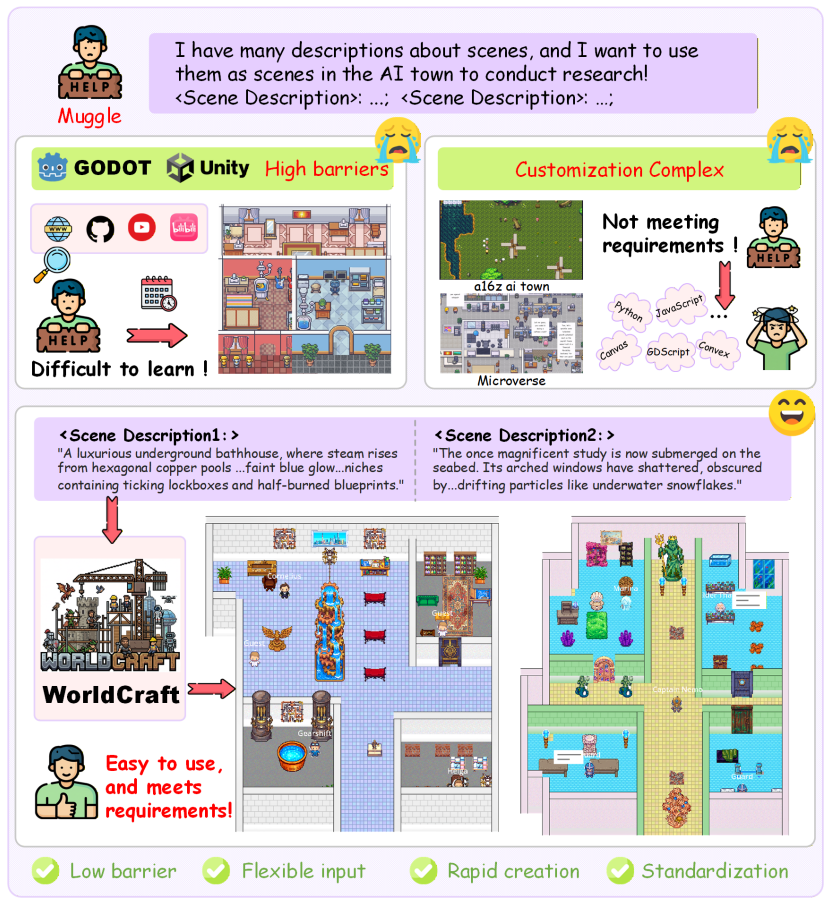

MeepleLM: A Virtual Playtester Simulating Diverse Subjective Experiences

- Zizhen Li, Chuanhao Li, Yibin Wang, Yukang Feng, Jianwen Sun, Jiaxin Ai, Fanrui Zhang, Mingzhu Sun, Yifei Huang, and Kaipeng Zhang+. [arXiv 2026] [paper]

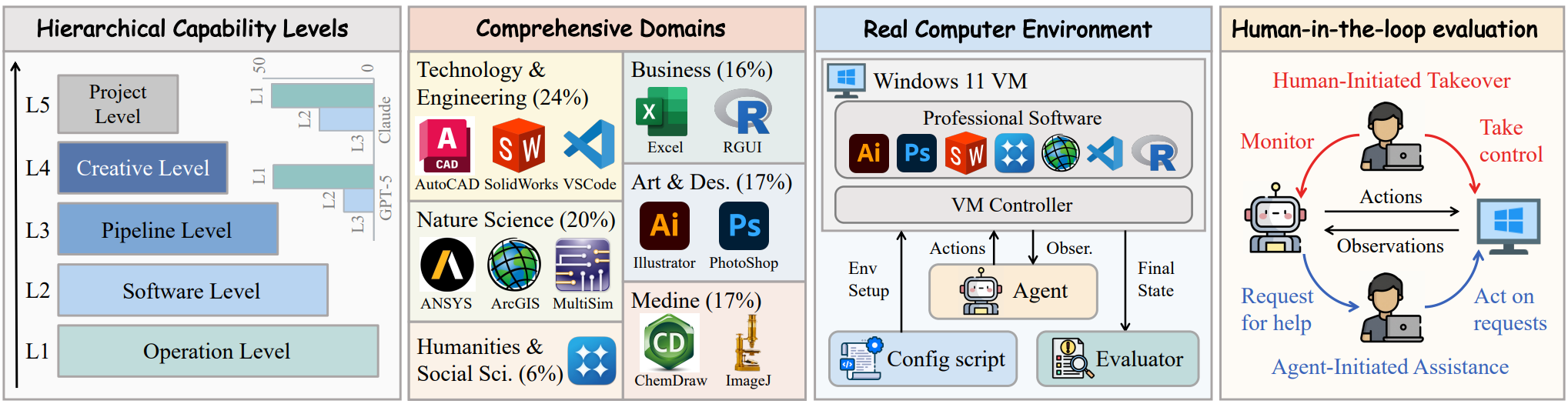

ProSoftArena: Benchmarking Hierarchical Capabilities of Multimodal Agents in Professional Software Environments

- Jiaxin Ai, Yukang Feng, Fanrui Zhang, Jianwen Sun, Zizhen Li, Chuanhao Li, Yifan Chang, Wenxiao Wu, Ruoxi Wang, Mingliang Zhai, and Kaipeng Zhang+. [arXiv 2025] [paper]

Yume1.5: A Text-Controlled Interactive World Generation Model

- Xiaofeng Mao, Zhen Li, Chuanhao Li, Xiaojie Xu, Kaining Ying, Tong He, Jiangmiao Pang, Yu Qiao, and Kaipeng Zhang+. [arXiv 2025] [paper]

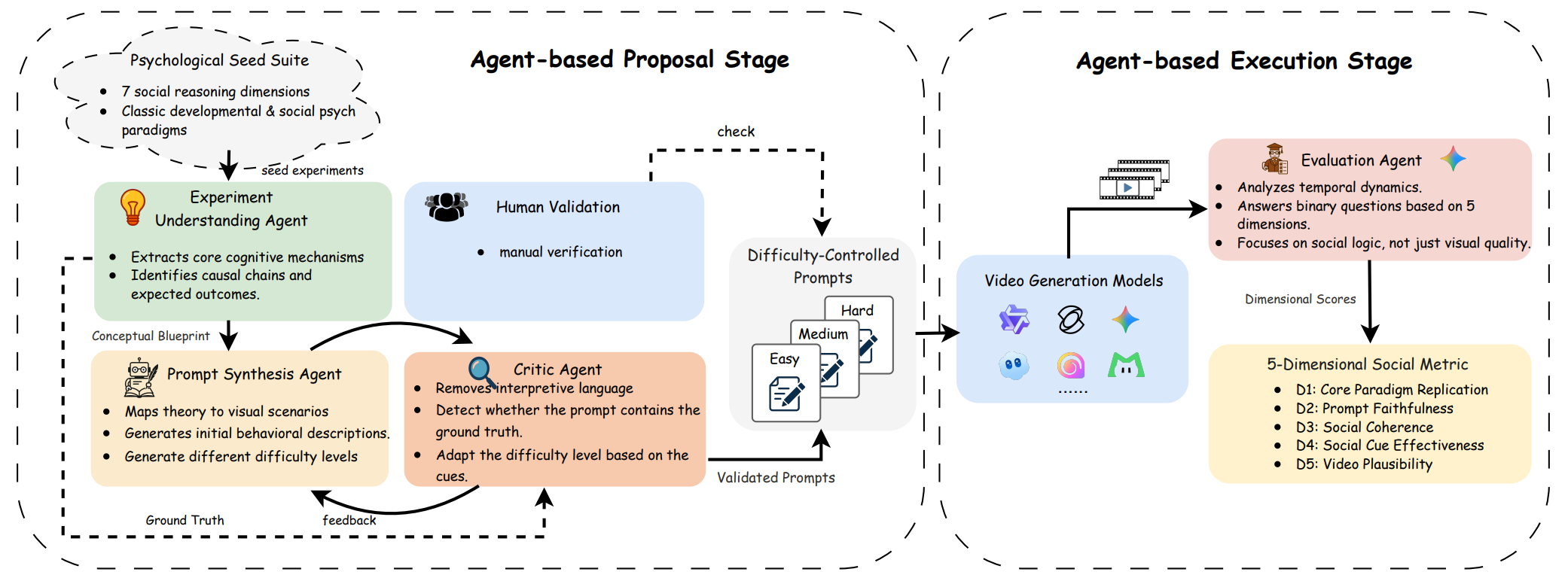

SVBench: Evaluation of Video Generation Models on Social Reasoning

- Wenshuo Peng, Gongxuan Wang, Tianmeng Yang, Chuanhao Li, Xiaojie Xu, Hui He, and Kaipeng Zhang+. [arXiv 2025] [paper]

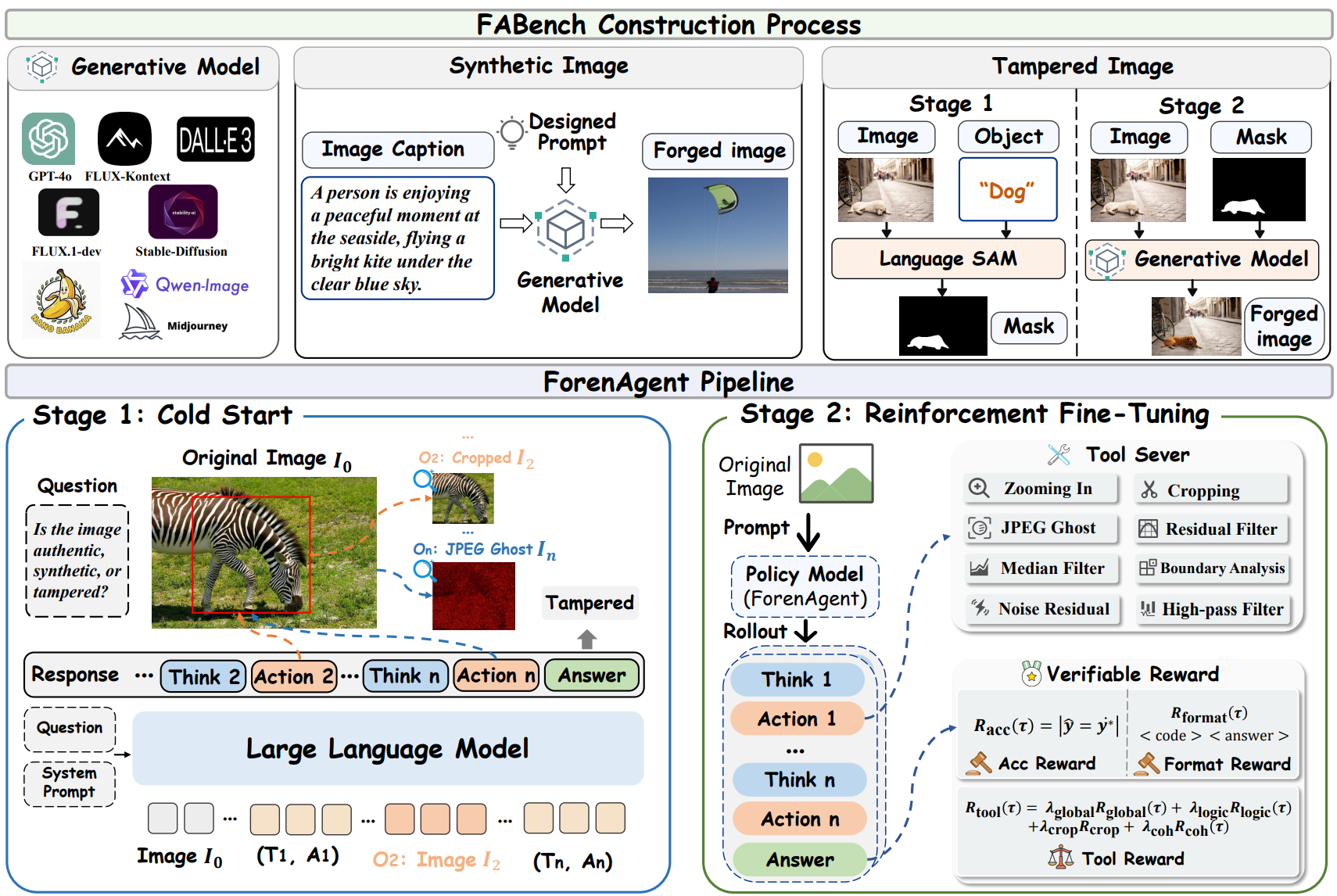

Code-in-the-Loop Forensics: Agentic Tool Use for Image Forgery Detection

- Fanrui Zhang, Qiang Zhang, Sizhuo Zhou, Jianwen Sun, Chuanhao Li, Jiaxin Ai, Yukang Feng, Yujie Zhang, Wenjie Li, Zizhen Li, Yifan Chang, Jiawei Liu, and Kaipeng Zhang+. [arXiv 2025] [paper]

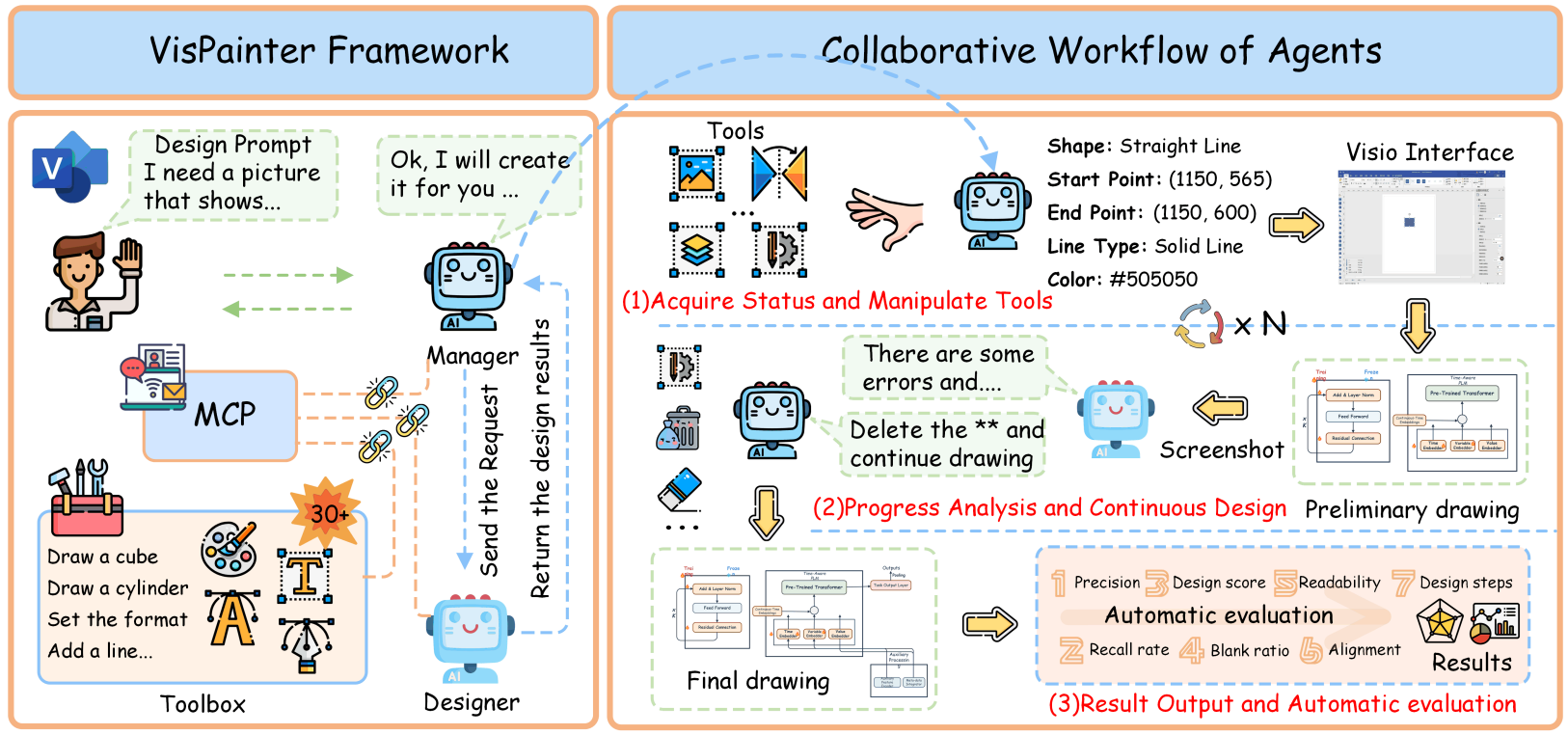

From Pixels to Paths: A Multi-Agent Framework for Editable Scientific Illustration

- Jianwen Sun*, Fanrui Zhang*, Yukang Feng*, Chuanhao Li, Zizhen Li, Jiaxin Ai, Yifan Chang, Yu Dai, and Kaipeng Zhang+. [arXiv 2025] [paper]

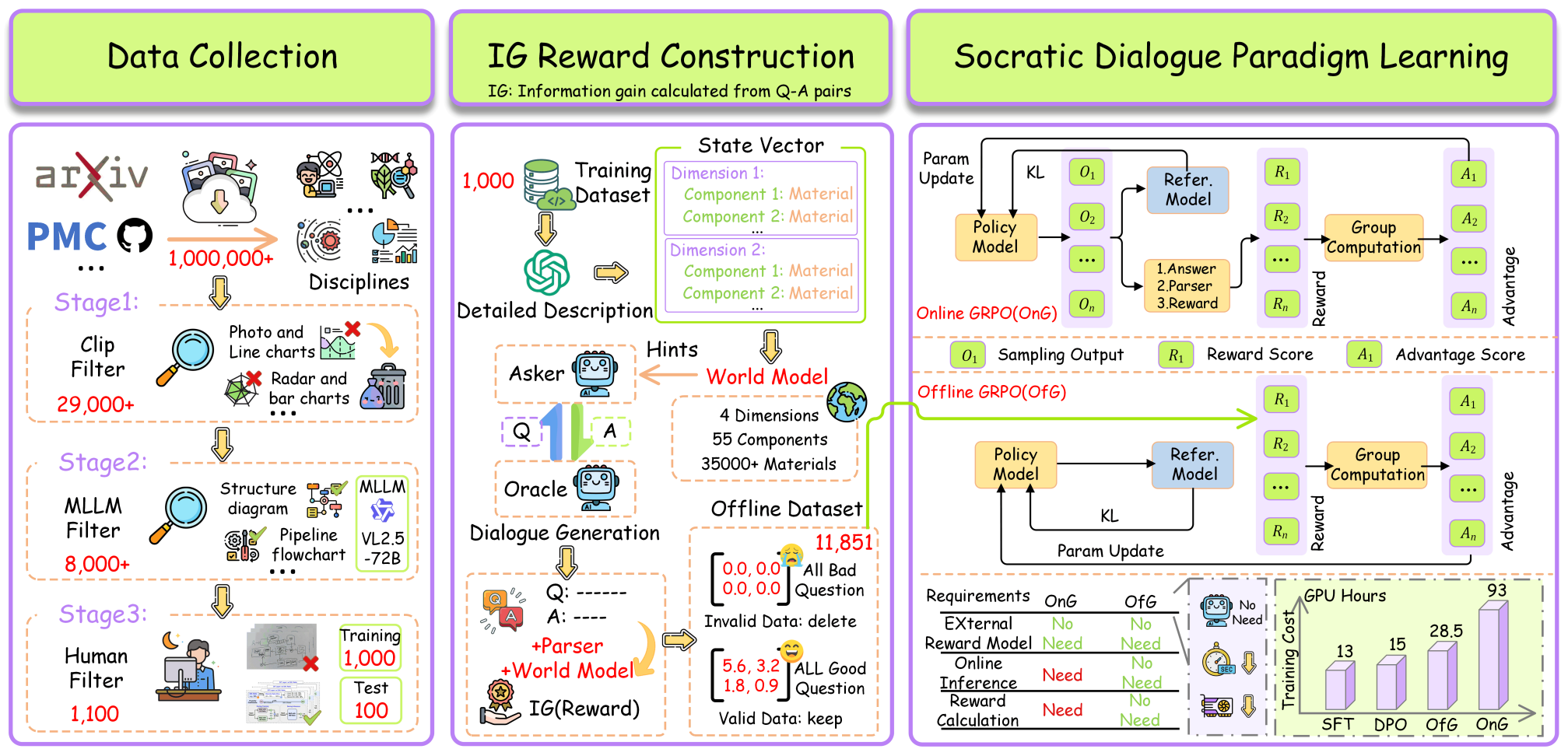

Dialogue as Discovery: Navigating Human Intent Through Principled Inquiry

- Jianwen Sun*, Yukang Feng*, Yifan Chang*, Chuanhao Li, Zizhen Li, Jiaxin Ai, Fanrui Zhang, Yu Dai, and Kaipeng Zhang+. [arXiv 2025] [paper]

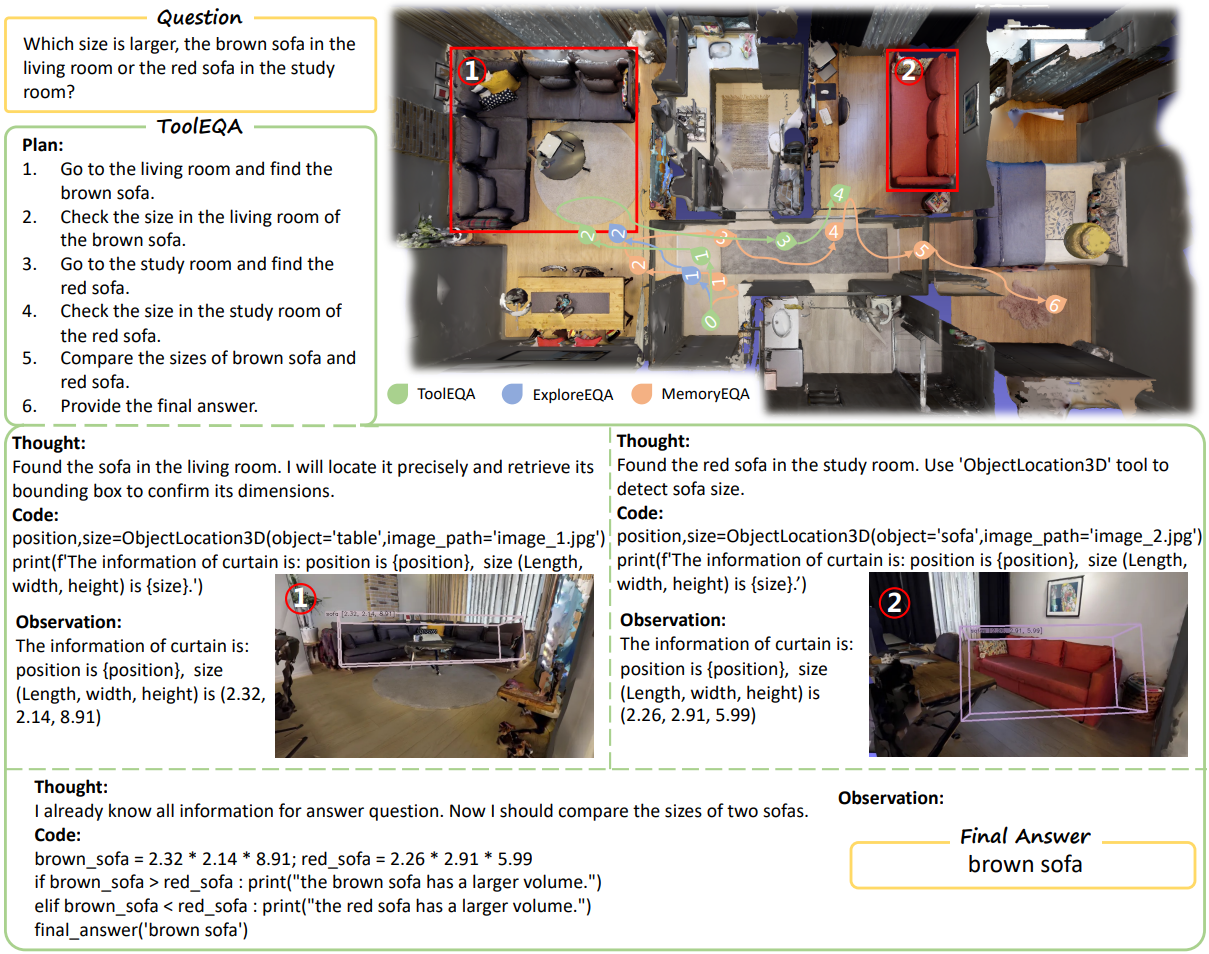

Multi-Step Reasoning for Embodied Question Answering via Tool Augmentation

- Mingliang Zhai*, Hansheng Liang*, Xiaomeng Fan*, Zhi Gao, Chuanhao Li, Che Sun, Xu Bin, Yuwei Wu, and Yunde Jia. [arXiv 2025] [paper]

YUME: An Interactive World Generation Model

- Xiaofeng Mao, Shaoheng Lin, Zhen Li, Chuanhao Li, Wenshuo Peng, Tong He, Jiangmiao Pang, Mingmin Chi+, Yu Qiao, and Kaipeng Zhang+. [arXiv 2025] [paper] [homepage] [code]

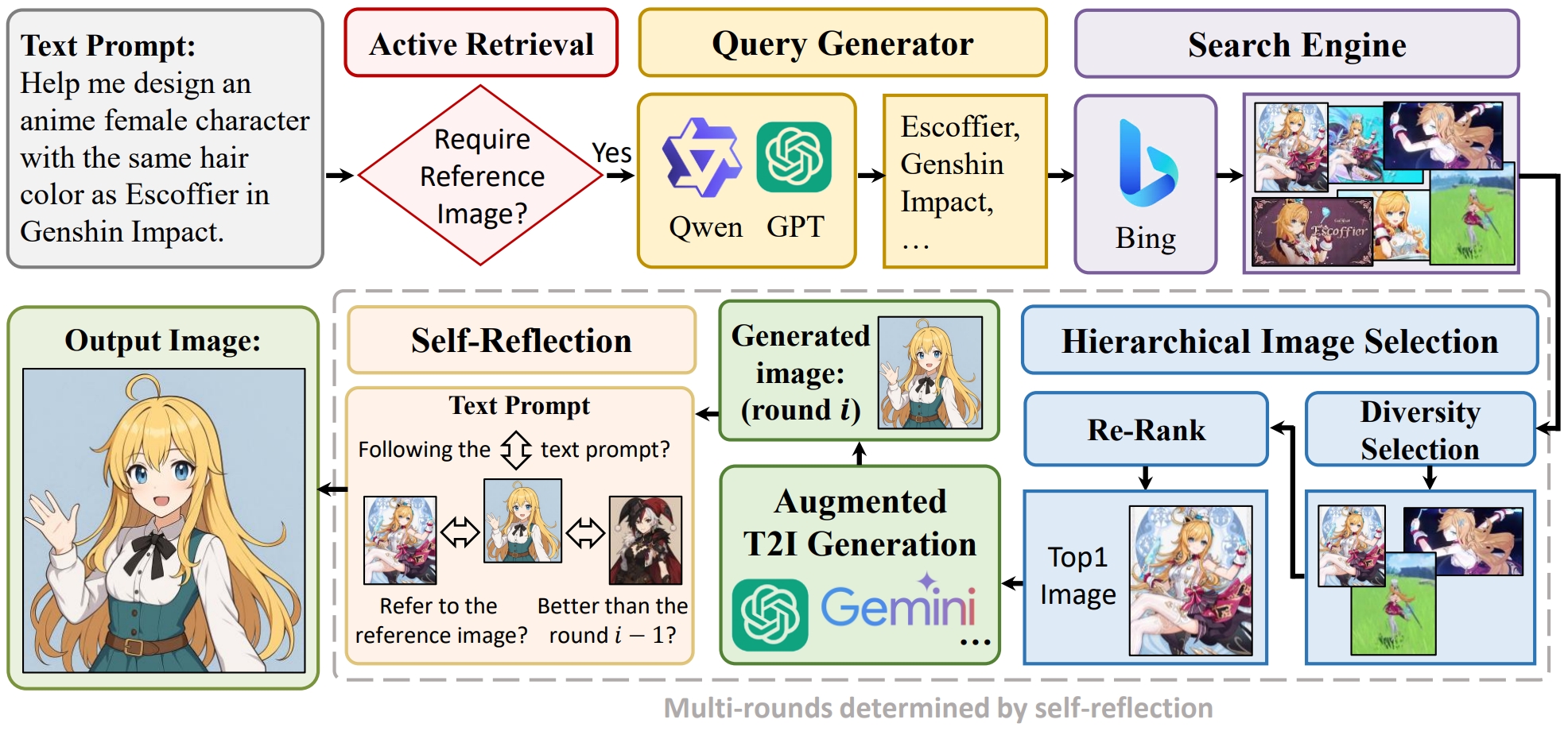

IA-T2I: Internet-Augmented Text-to-Image Generation

- Chuanhao Li*, Jianwen Sun*, Yukang Feng*, Mingliang Zhai, Yifan Chang, and Kaipeng Zhang+. [arXiv 2025] [paper]

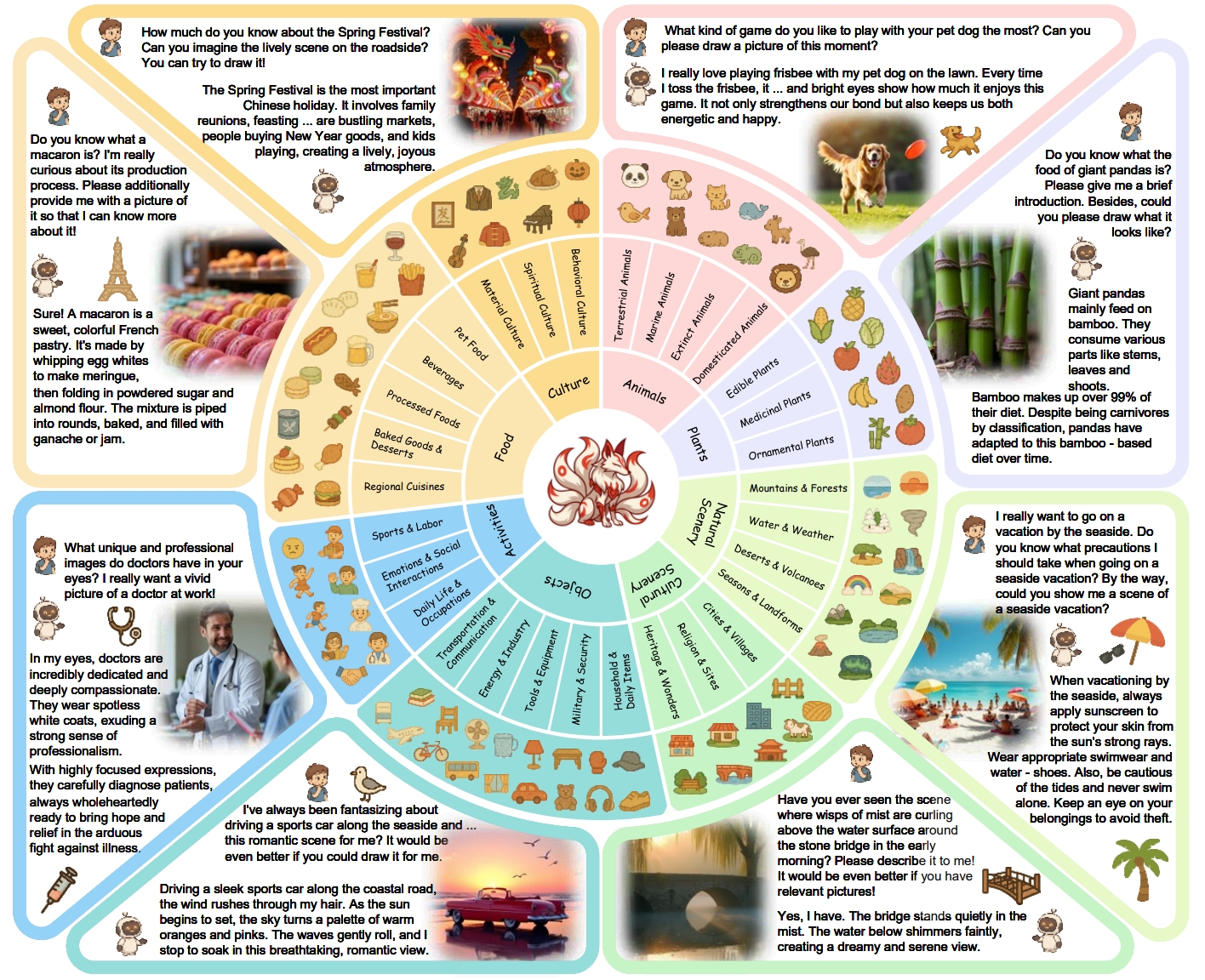

A High-Quality Dataset and Reliable Evaluation for Interleaved Image-Text Generation

- Yukang Feng*, Jianwen Sun*, Chuanhao Li, Zizhen Li, Jiaxin Ai, Fanrui Zhang, Yifan Chang, Sizhuo Zhou, Shenglin Zhang, Yu Dai, and Kaipeng Zhang+. [arXiv 2025] [paper]

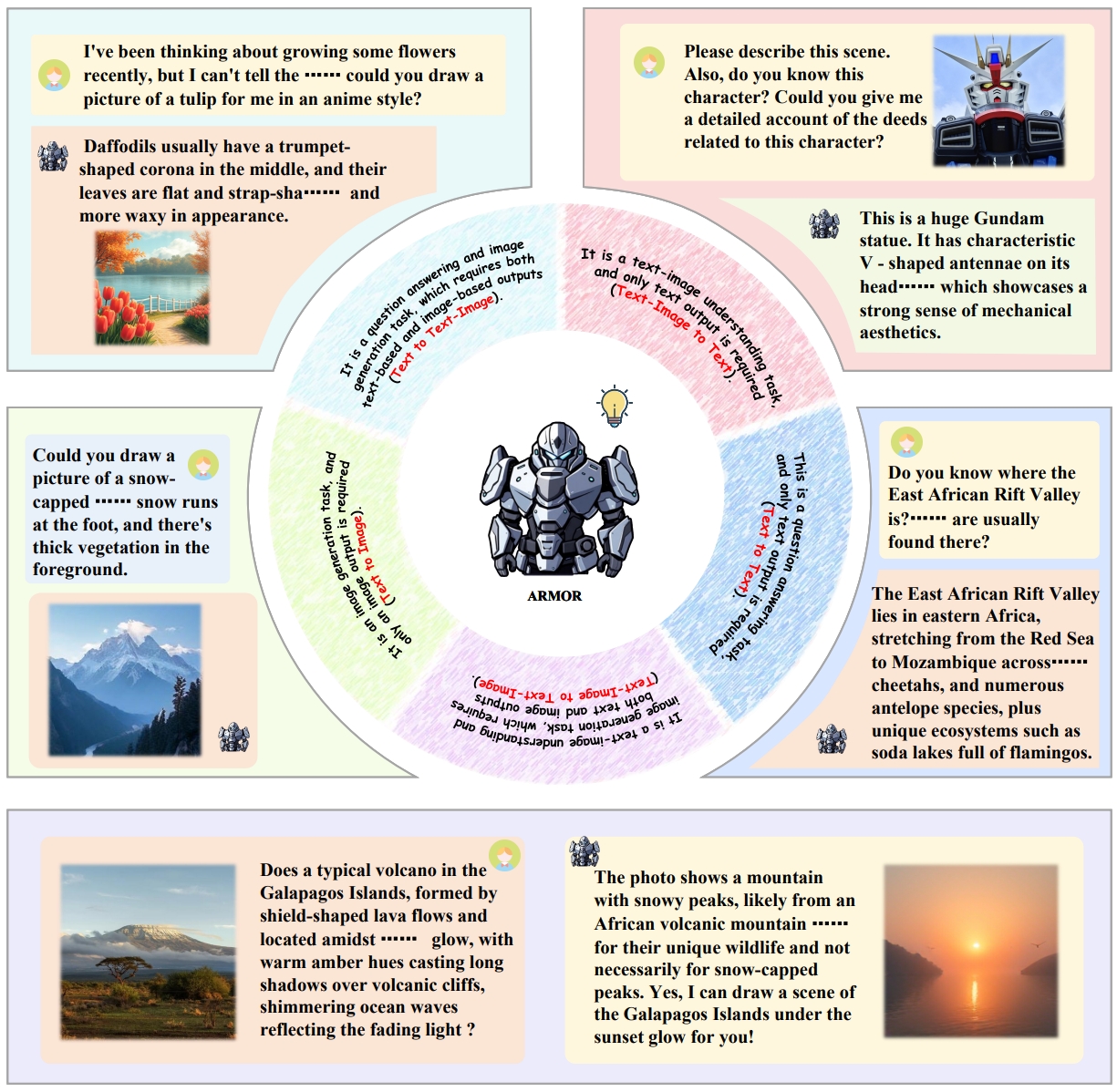

ARMOR: Empowering Autoregressive Multimodal Understanding Model with Interleaved Multimodal Generation via Asymmetric Synergy

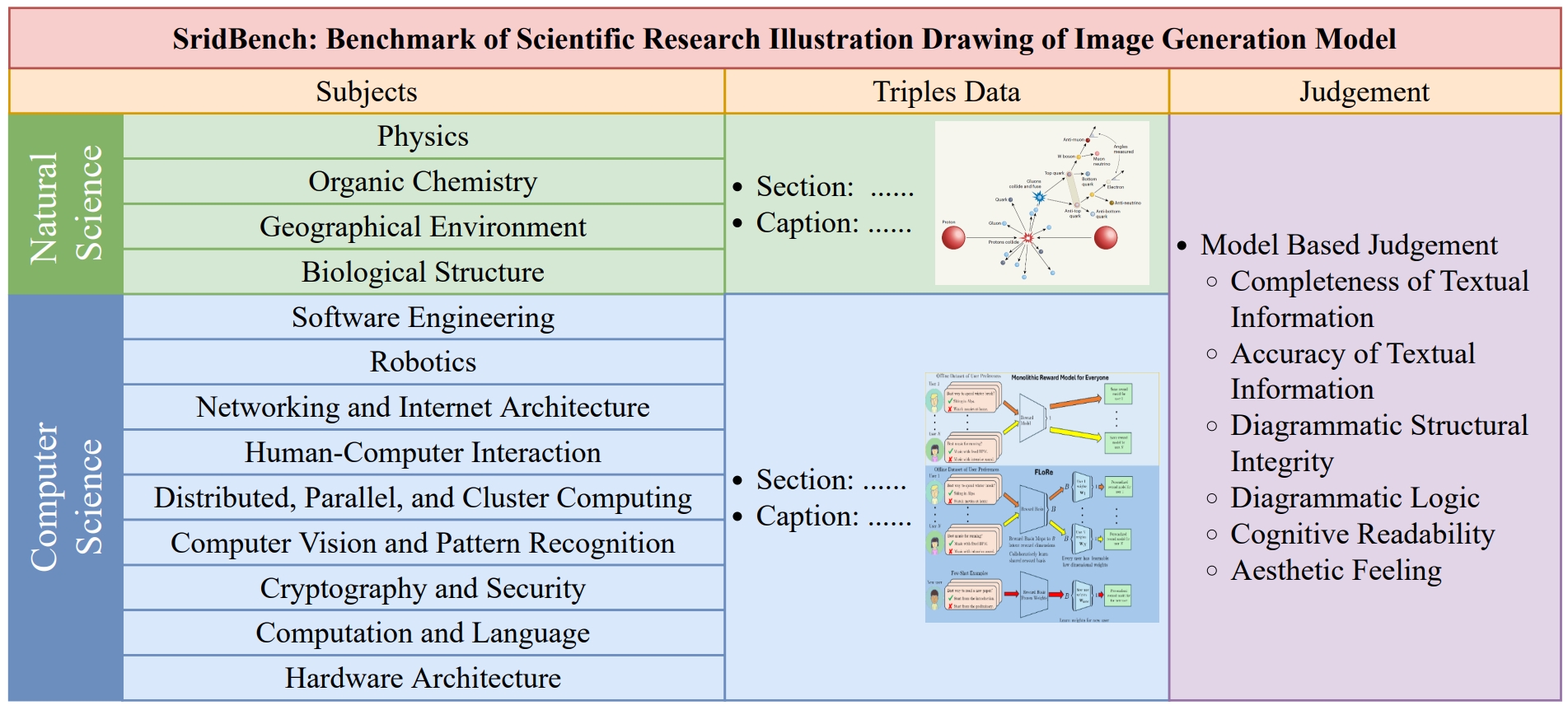

SridBench: Benchmark of Scientific Research Illustration Drawing of Image Generation Model

- Yifan Chang*, Yukang Feng*, Jianwen Sun*, Jiaxin Ai, Chuanhao Li, S. Kevin Zhou, and Kaipeng Zhang+. [arXiv 2025] [paper]

📝 Selected Publications

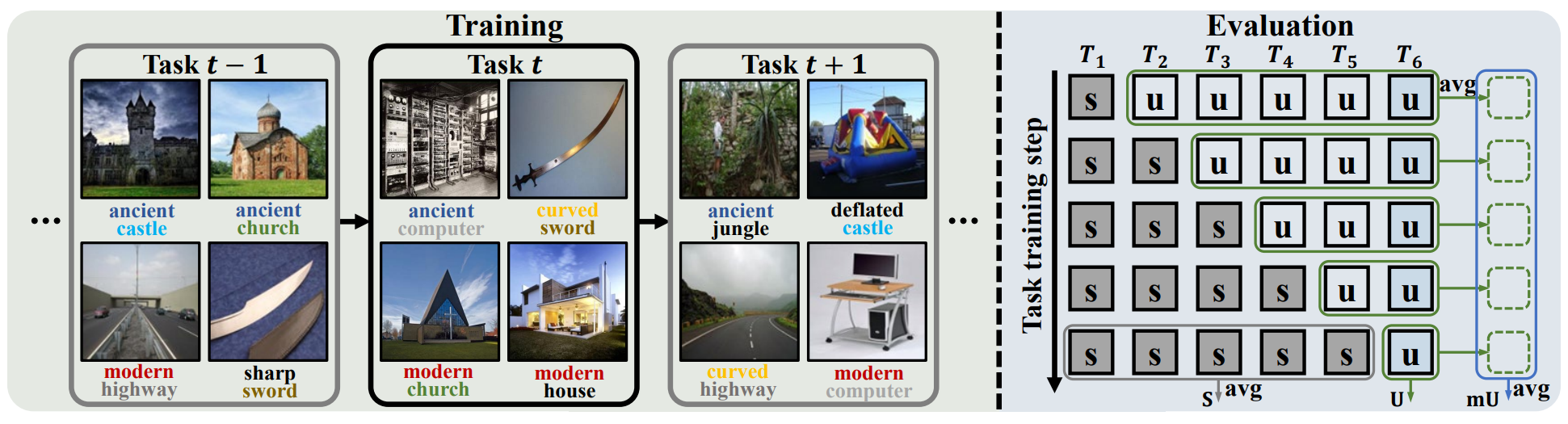

Composition-Incremental Learning for Compositional Generalization

- Zhen Li, Yuwei Wu, Chenchen Jing, Che Sun+, Chuanhao Li+, and Yunde Jia. [AAAI 2026] [paper]

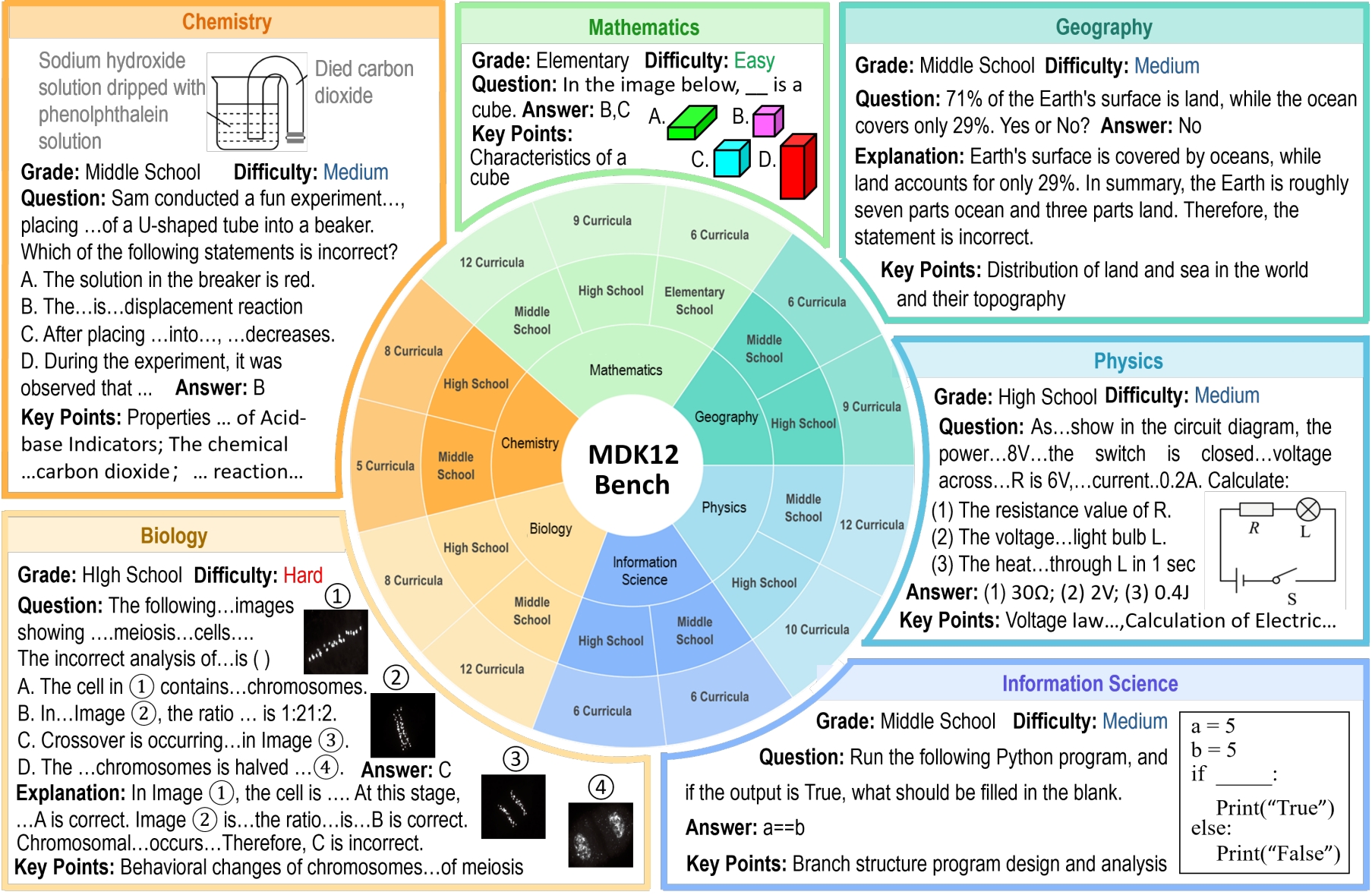

MDK12-Bench: A Multi-Discipline Benchmark for Evaluating Reasoning in Multimodal Large Language Models

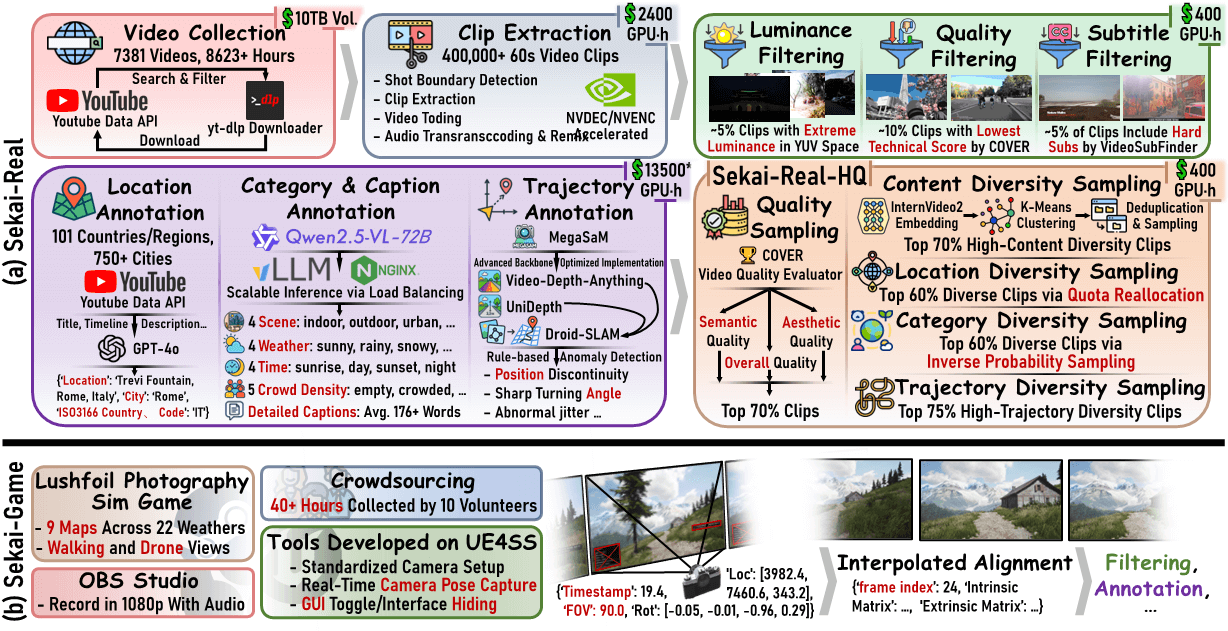

Sekai: A Video Dataset towards World Exploration

- Zhen Li*, Chuanhao Li*+, …, Yuwei Wu+, Tong He, Jiangmiao Pang, Yu Qiao, Yunde Jia, and Kaipeng Zhang+. [NeurIPS 2025] [paper] [homepage] [dataset] [code]

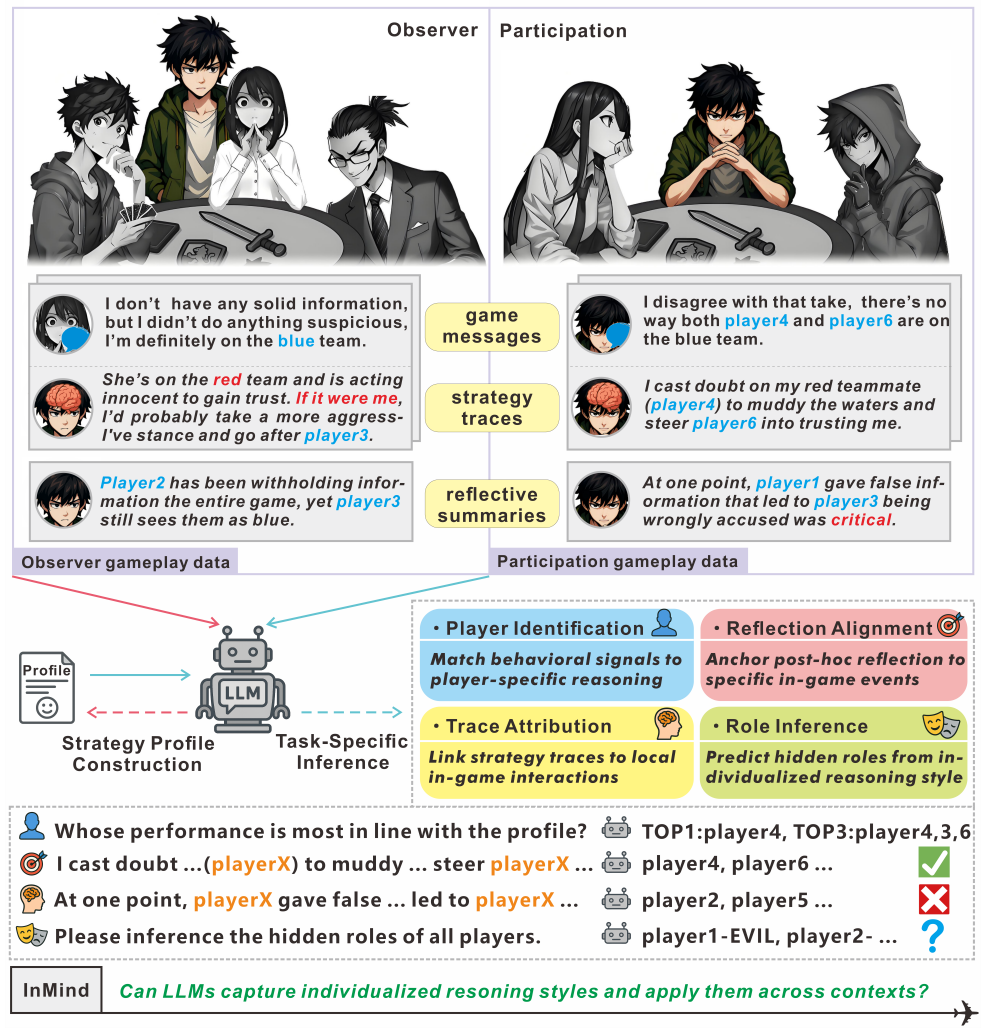

InMind: Evaluating LLMs in Capturing and Applying Individual Human Reasoning Styles

- Zizhen Li, Chuanhao Li, Yibin Wang, Qi Chen, Diping Song, Yukang Feng, Jianwen Sun, Jiaxin Ai, Fanrui Zhang, Mingzhu Sun, and Kaipeng Zhang+. [EMNLP 2025] [Main Conference] [paper]

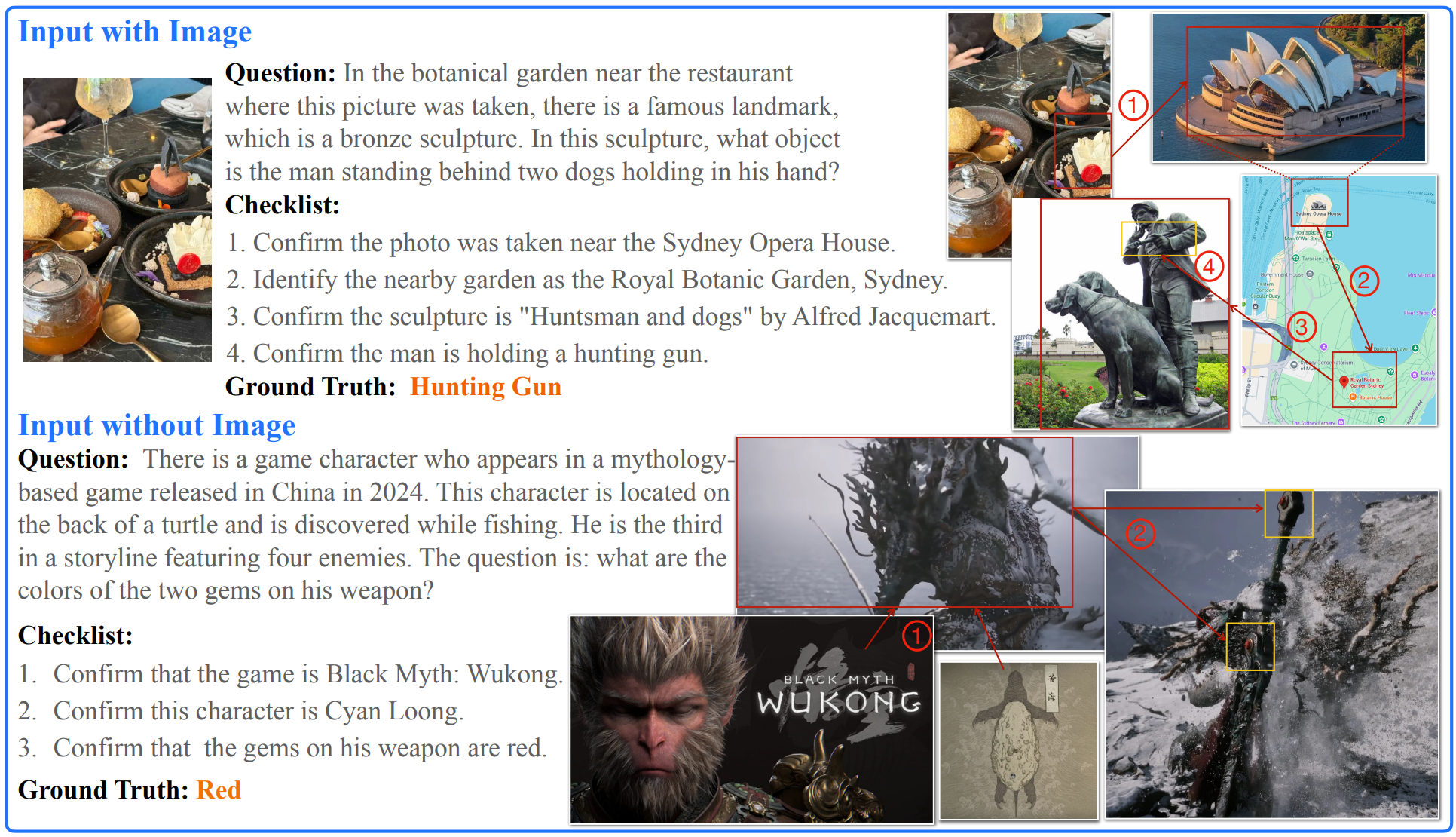

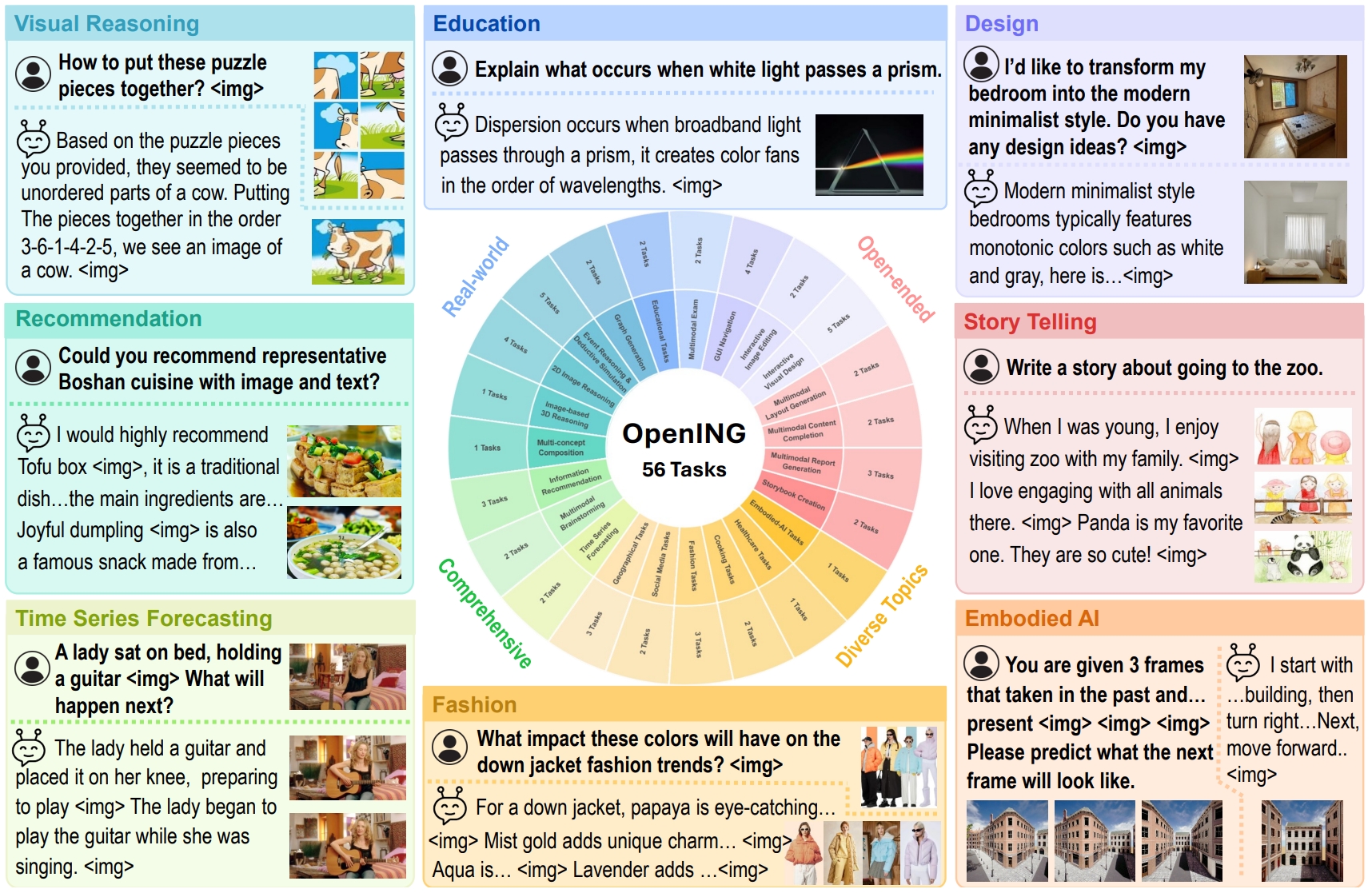

GATE OpenING: A Comprehensive Benchmark for Judging Open-ended Interleaved Image-Text Generation

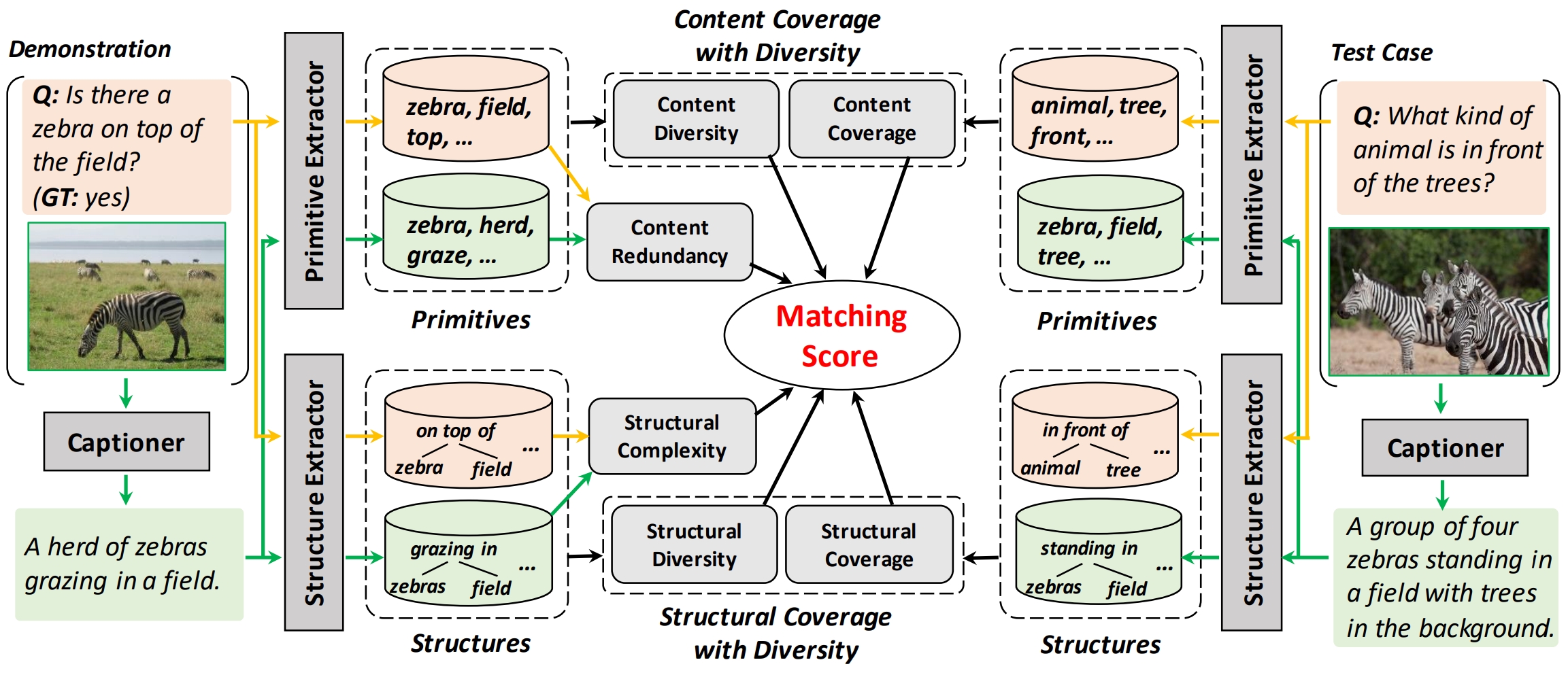

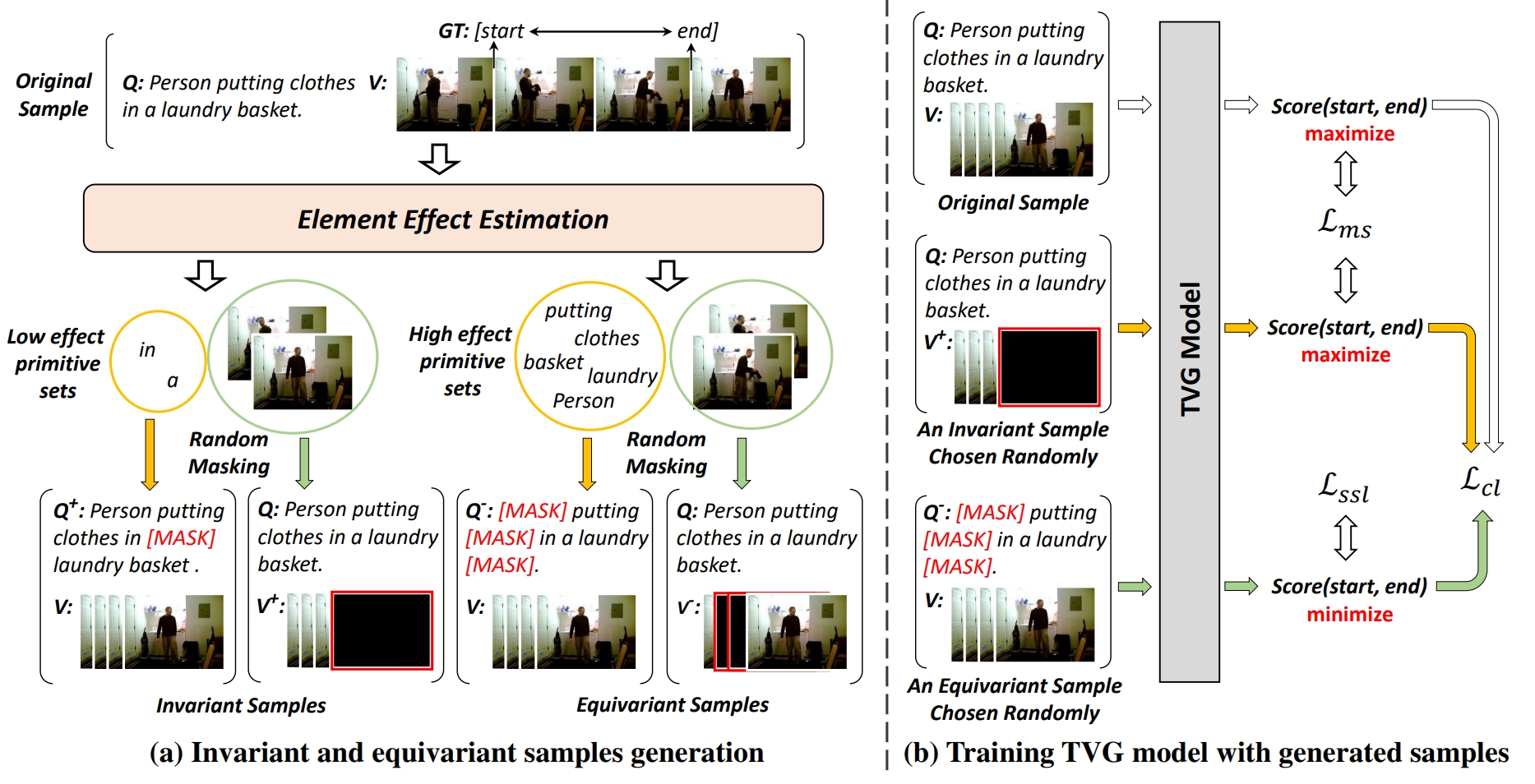

In-Context Compositional Generalization for Large Vision-Language Models

- Chuanhao Li, Chenchen Jing, Zhen Li, Mingliang Zhai, Yuwei Wu+, and Yunde Jia. [EMNLP 2024] [Main Conference] [paper]

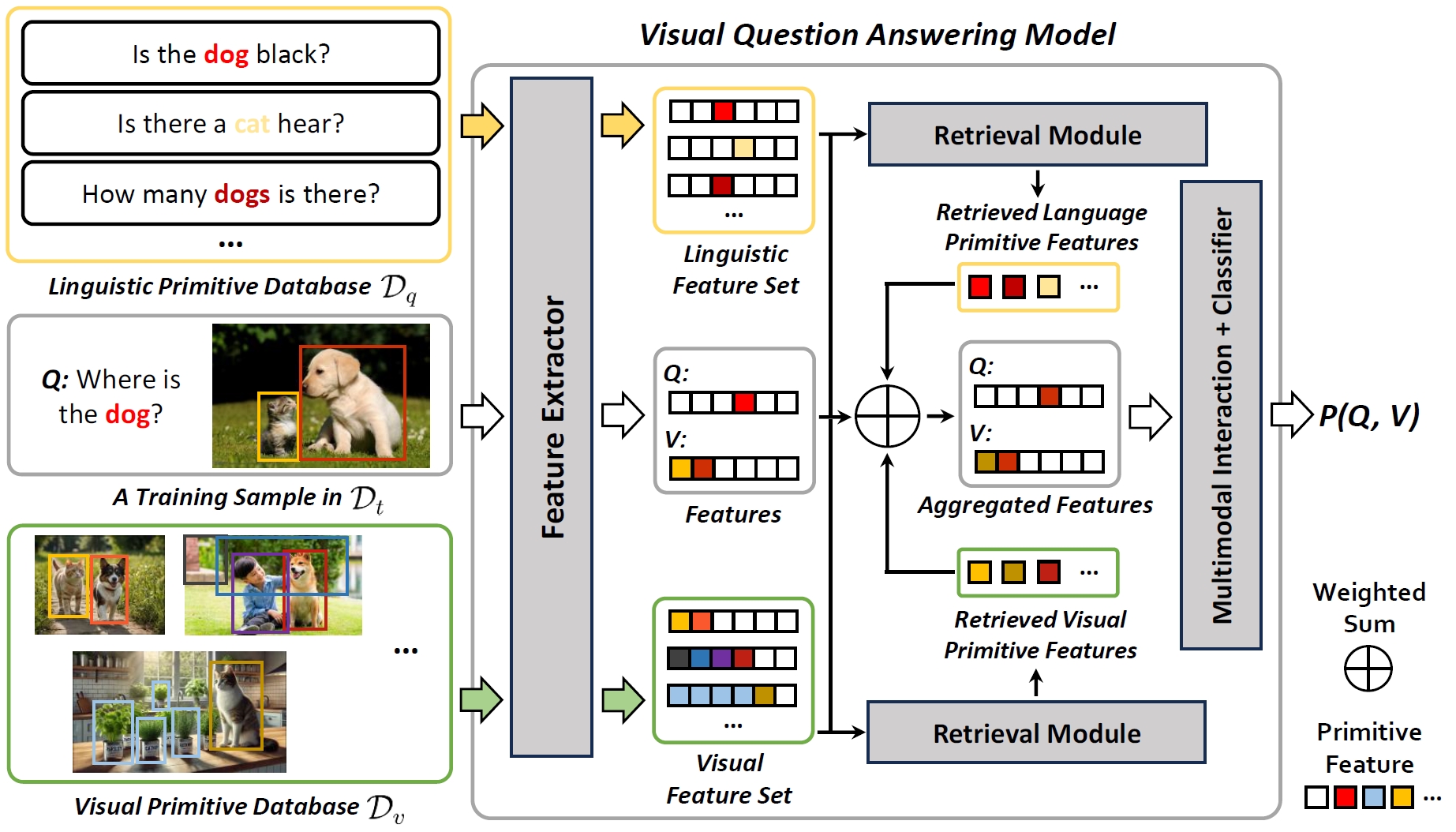

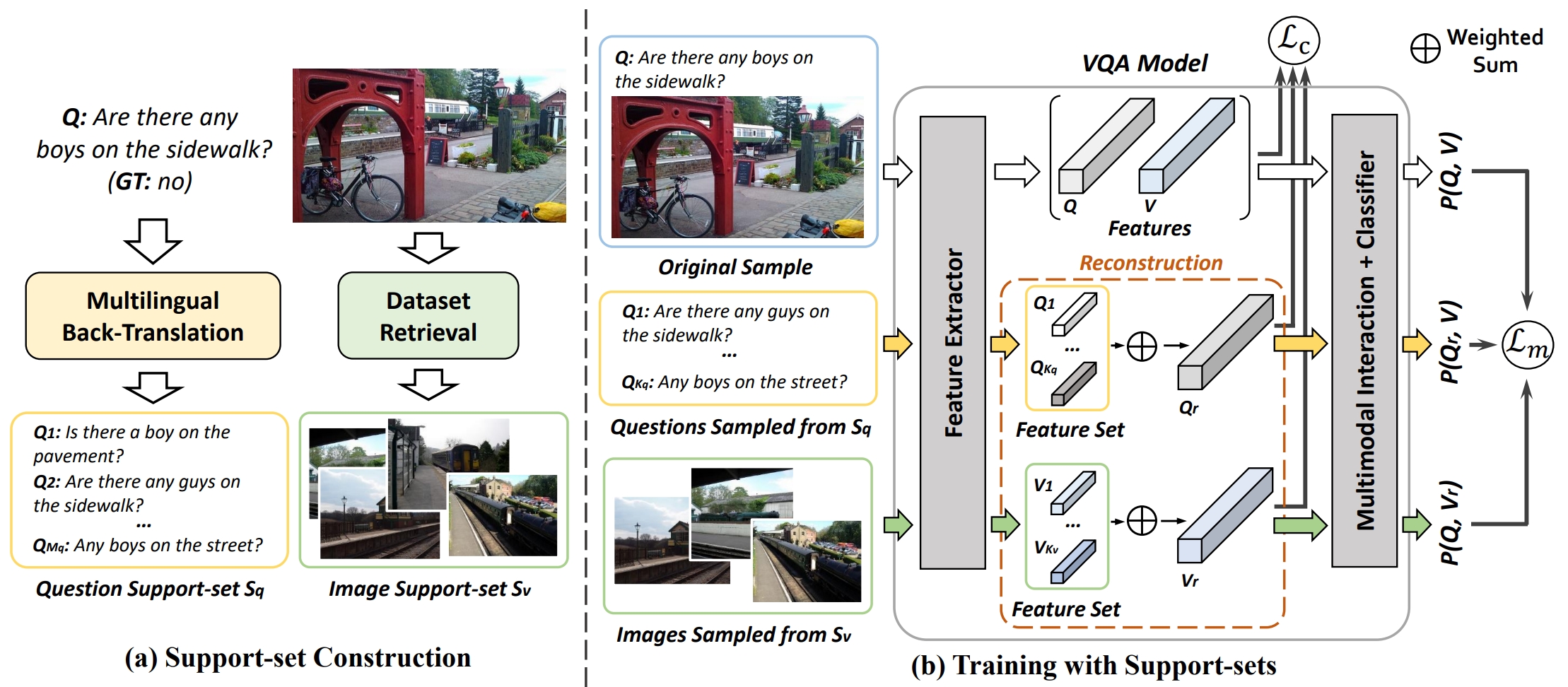

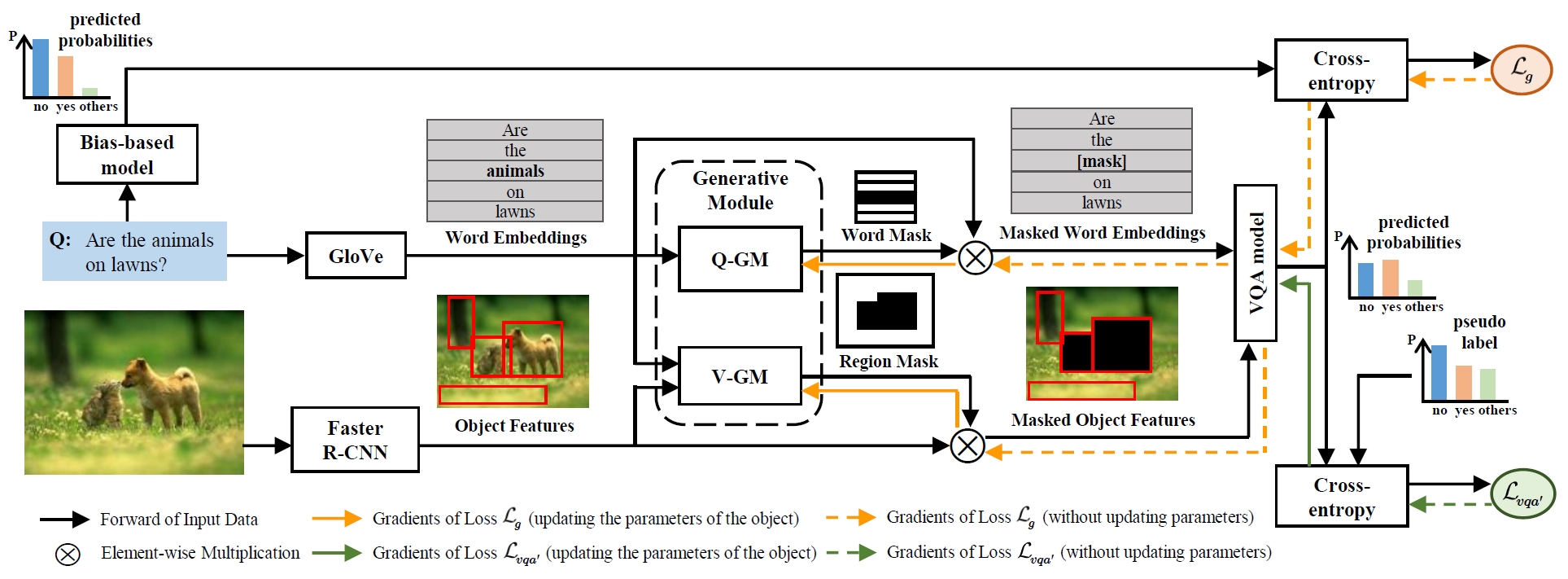

Adversarial Sample Synthesis for Visual Question Answering

- Chuanhao Li, Chenchen Jing, Zhen Li, Yuwei Wu+, and Yunde Jia. [TOMM 2024] [paper]

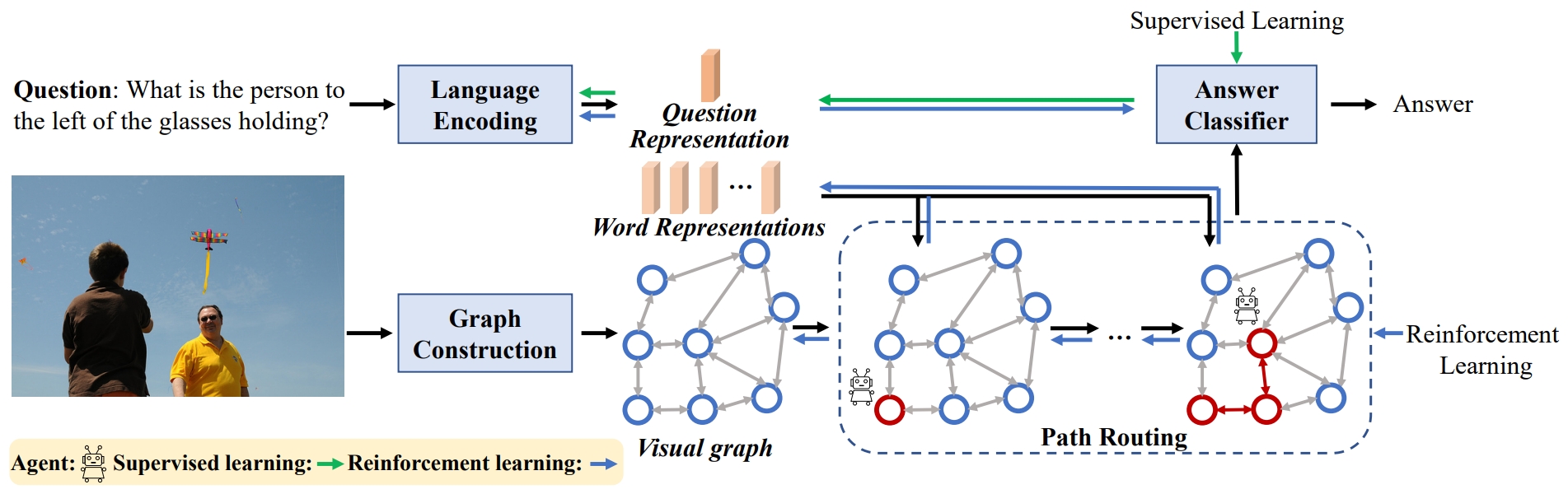

Learning the Dynamics of Visual Relational Reasoning via Reinforced Path Routing

- Chenchen Jing, Yunde Jia, Yuwei Wu, Chuanhao Li, and Qi Wu. [AAAI 2022] [paper]

🏅 Selected Awards

- 2023.01, the second prize in the multi-modal technology innovation competition of the first “Xingzhi Cup” National Artificial Intelligence Innovation Application Competition

- 2016.05, the first prize in the CCPC Heilongjiang Collegiate Programming Contest

- 2015.05, the first prize in the CCPC Heilongjiang Collegiate Programming Contest

- 2014.07, the silver medal in the ACM-ICPC Collegiate Programming Contest Shanghai Invitational

🏛️ Academic Activities

- Conference Reviewer for MM 2025, ICCV 2025, ICML 2025, IJCAI 2025, CVPR 2024, NeurIPS 2024, MM 2024, etc.

- Journal Reviewer for T-MM.

- Invited Speaker at the 3rd SMBU-BIT Machine Intelligence Graduate Student Forum.

💻 Work Experience

- 2025.04 - Present, Researcher, Shanghai AI Lab, Shanghai, China

- 2024.01 - 2025.04, Intern, Shanghai AI Lab, Shanghai, China

- 2019.07 - 2019.10, Intern, UISEE, Beijing, China